You’re going to hear a lot about HDMI 2.1 in 2019. It’s the latest iteration of the A/V connection format that consumers have used since it replaced older connection types nearly two decades ago, and with 8K TVs ready to beat down the door into your living room, HDMI 2.1 is a necessary and much-anticipated upgrade.

While HDMI 2.1 ports and cables will look identical to those we use now, this newest update—which will inevitably replace the HDMI 2.0 standard that was introduced in 2013—has quite a few differences from previous versions. It’s packed with new features and will be capable of delivering incredibly high-quality video. It’s also more complicated and more restrictive in some ways than previous HDMI versions.

This guide will explain the differences between HDMI 2.1 and the current 2.0, what it means for your TV and home theater devices, and whether you should buy a new TV because of it (spoiler: you shouldn’t).

HDMI 2.1 versus 2.0 (and 2.0a and 2.0b)

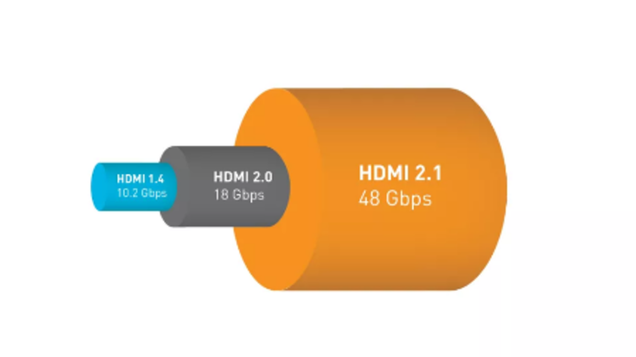

HDMI technology has gone through several revisions and updates over the years. The current standard, HDMI 2.0, replaced HDMI 1.4 in 2013 and updated the technology to support 4K Ultra High Def (UHD) video at 60 frames per second, plus a number of A/V features. Better still, it didn’t require a trip to the dust-covered lands behind your TV to swap out all your HDMI cables.

Two interim updates, 2.0a and 2.0b, expanded High Dynamic Range (HDR) support for HDMI 2.0 but are otherwise identical to 2.0 and use the same cables.

HDMI 2.1, on the other hand, is an entirely different beast.

The main difference is that HDMI 2.1 increases the maximum signal bandwidth from 18Gbps (HDMI 2.0) to 48Gbps, which enables video resolutions of up to 10K and frame rates as high as 120fps. Those numbers seem grossly unnecessary given current hardware realities, but they’re impressive nonetheless, and they leave plenty of headroom, so you (hopefully) won’t have to upgrade your cables or connectors again for some time.
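To put those bandwidth figures in perspective, here is a rough back-of-the-envelope sketch in Python. It only counts raw pixel data (width × height × frames per second × bits per pixel) and ignores the blanking intervals and link-encoding overhead that a real HDMI connection also has to carry, so treat the results as a lower bound on what a mode needs. The function name and the default of 24 bits per pixel (8-bit color, no chroma subsampling) are assumptions made for this illustration, not anything taken from the HDMI specification.

```python
# Headline link rates quoted in this article, in gigabits per second.
HDMI_2_0_GBPS = 18
HDMI_2_1_GBPS = 48


def raw_video_gbps(width, height, fps, bits_per_pixel=24):
    """Raw pixel payload in Gbps; ignores blanking and encoding overhead."""
    return width * height * fps * bits_per_pixel / 1e9


if __name__ == "__main__":
    modes = {
        "4K at 60fps": (3840, 2160, 60),
        "4K at 120fps": (3840, 2160, 120),
        "8K at 60fps": (7680, 4320, 60),
    }
    for name, (w, h, fps) in modes.items():
        rate = raw_video_gbps(w, h, fps)
        # Exceeding the headline rate means the mode cannot fit uncompressed;
        # staying under it is necessary but not sufficient.
        over_2_0 = rate > HDMI_2_0_GBPS
        print(f"{name}: ~{rate:.1f} Gbps raw, exceeds HDMI 2.0: {over_2_0}")
```

Even by this generous estimate, 4K at 120fps in full 8-bit color needs more than HDMI 2.0’s 18Gbps, which is why the higher frame rates depend on the newer standard.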

HDMI 2.1 also brings a number of other A/V features and enhancements, including:

- Dynamic HDR, which is capable of changing HDR settings on a frame-by-frame basis.

- Enhanced Audio Return Channel (eARC), which enables the use of object-based surround sound formats, such as Dolby Atmos.

- Variable Refresh Rate (VRR), Quick Frame Transport (QFT) and Auto Low Latency Mode (ALLM), which are helpful for video games: VRR syncs the display’s refresh rate to the frame rate the source is putting out, while QFT and ALLM cut latency and input lag, for smoother, more responsive gameplay.

- Quick Media Switching (QMS), which removes the delay when switching between resolutions and frame rates.

Aside from the higher signal bandwidth and new features, the other notable difference between HDMI 2.0 and 2.1 is that 2.1 will require new cables—something HDMI 2.0 mercifully did not, despite being a massive jump from HDMI 1.4.

These new cables, called “ultra high speed” cables, are what enable the higher resolutions and frame rates, but you don’t need to worry about buying them any time soon. Ultra high speed cables will only be required for those higher resolutions and frame rates, while the additional HDMI 2.1 features (like eARC, Dynamic HDR, and the latency-reducing benefits) are compatible with most current HDMI cables.

Unfortunately, it will not be possible to update an existing HDMI 2.0 device to support HDMI 2.1 features via firmware or software updates; the only way to use HDMI 2.1’s features is to connect an HDMI 2.1 device to a TV that supports HDMI 2.1 (even if that connection uses an HDMI cable that isn’t ultra high speed).

Here’s where it gets confusing, however. Depending on the device, “HDMI 2.1 support” might mean different things.

HDMI 2.1 versus… HDMI 2.1?

Technically, TV manufacturers can legally advertise that their TVs feature HDMI 2.1 ports, even if they don’t support the super-high resolutions or frame rates that HDMI 2.1 enables. The TVs just have to support some HDMI 2.1 features, and manufacturers have to be open about what their sets have and don’t have—which is precisely what you’ll be seeing from the first wave of “HDMI 2.1”-supported TVs.

Sure, having a truncated version of HDMI 2.1 makes sense for some TVs. You might not care if your brand-new TV can’t display 120fps, given that there’s barely any content you can watch at that frame rate right now. But you’re going to want to be diligent about HDMI 2.1 marketing when you’re shopping for new TVs going forward. (That said, you probably shouldn’t buy an 8K TV in 2019, anyway.)
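One way to be diligent is to compare what a spec sheet actually lists against the HDMI 2.1 features described earlier in this guide. The Python sketch below does that with plain sets. The feature labels and the sample TV are hypothetical, chosen only for this example, and real spec sheets may word these features differently.

```python
# HDMI 2.1 features named in this guide (labels chosen for this example).
HDMI_2_1_FEATURES = {
    "48Gbps bandwidth",
    "Dynamic HDR",
    "eARC",
    "VRR",
    "QFT",
    "ALLM",
    "QMS",
}


def missing_features(advertised):
    """Return the HDMI 2.1 features a spec sheet does not list, sorted."""
    return sorted(HDMI_2_1_FEATURES - set(advertised))


if __name__ == "__main__":
    # Hypothetical first-wave "HDMI 2.1" TV with only partial support.
    sample_tv = ["eARC", "ALLM", "VRR"]
    print(missing_features(sample_tv))
```

An empty list would mean the set claims everything on the checklist; anything left over is worth asking about before you buy.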

What’s wrong with an 8K TV?

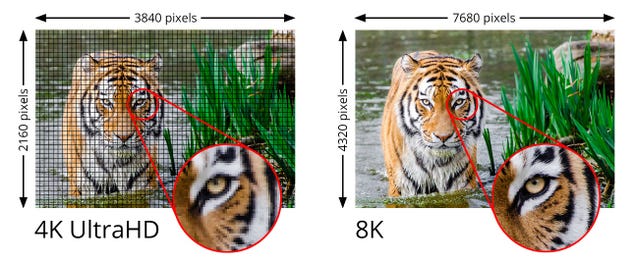

The jump to 8K Full Ultra High Def (FUHD) screens and HDMI 2.1 won’t make your current TV obsolete, since HDMI 2.0 and 4K UHD content and devices are going to remain relevant for quite some time.

8K might sound like another exciting leap in visual fidelity, and many of the A/V enhancements from HDMI 2.1 will likely be awesome, but there just isn’t enough 8K content to justify the astronomical expense of an 8K TV, and there likely won’t be for quite some time. Yes, it’s the same argument everyone made about 4K TVs, but think about it: 4K content is still something of a novelty for most people, and 4K TVs are in some ways still unnecessary for many people, depending on their home setups.

That’s not to say that there isn’t any 8K content out there, but it’s paltry, with only a handful of movies and YouTube videos available. 8K Blu-rays and 8K Blu-ray players don’t yet exist, nor do 8K cable boxes or streaming devices. And even when they do, the first generation of 8K TVs likely won’t be compatible, since the initial models arriving in 2019 will probably have incomplete HDMI 2.1 support. Plus, HDMI 2.0 is capable of 8K video at 24 and 30 fps, which are the frame rates most movies and TV shows are shot at right now.

The only consumers who have a legitimate reason to upgrade to HDMI 2.1 sooner than others are gamers and hardcore home theater aficionados. Even then, most console gamers are going to have to wait for the big reveals from Microsoft and Sony—likely in 2020—which should give TV manufacturers plenty of time to pack in as many HDMI 2.1 features (and resolutions) as possible.

In other words, buying an 8K TV now and waiting for everything else to catch up would be a little silly; buy an 8K TV when you’re ready for an 8K TV, because you’ll have much better products to pick from then than you do now. Maybe the TVs will be a little more reasonably sized, too.

from Lifehacker http://bit.ly/2W2ZPWX
