NVIDIA

Attendees of this year’s NeurIPS AI conference in Montreal can spend a few moments driving through a virtual city, courtesy of NVIDIA. While that normally wouldn’t be much to get worked up over, the simulation is fascinating because of what made it possible. With the help of some clever machine learning techniques and a handy supercomputer, NVIDIA has cooked up a way for AI to chew on existing videos and use the objects and scenery found within them to build interactive environments.

NVIDIA’s research here isn’t just a significant technical achievement; it also stands to make it easier for artists and developers to craft lifelike virtual worlds. Instead of having to meticulously design objects and people to fill a space polygon by polygon, they can use existing machine learning tools to roughly define those entities and let NVIDIA’s neural network fill in the rest.

“Neural networks — specifically generative models — will change how graphics are created,” Bryan Catanzano, NVIDIA’s vice president of applied deep learning, said in a statement. “This will enable developers, particularly in gaming and automotive, to create scenes at a fraction of the traditional cost.”

Here’s how it works. Catanzano told reporters that researchers trained the fledgling neural model with dashcam videos taken from self-driving car trials in cities for about a week on one of the company’s DGX-1 supercomputers. (NVIDIA CEO Jensen Huang once called the DGX-1 the equivalent of “250 servers in a box,” so pulling off a similar feat at home seems all but impossible.)

NVIDIA

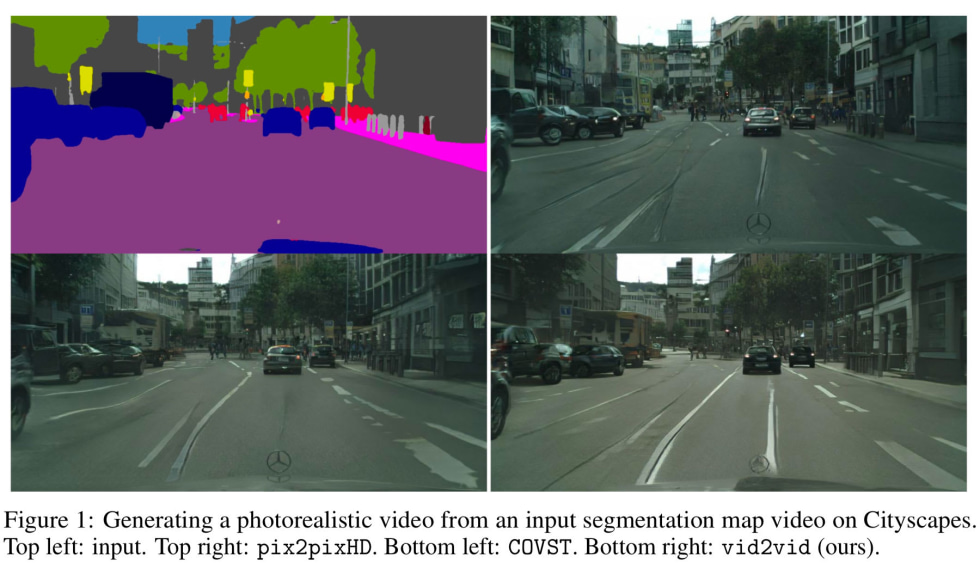

Meanwhile, the research team used Unreal Engine 4 to create what they called a “semantic map” of a scene, which essentially assigns every pixel on-screen a label. Some pixels got lumped into the “car” bucket, others into the “trees” category, or “buildings” — you get it. Those clumps of pixels were also given clearly defined edges, so Unreal Engine ultimately produced a sort of “sketch” of a scene that got fed to NVIDIA’s neural model. From there, the AI applied the visuals for what it knew a “car” looked like to the clump of pixels labeled “car” and repeated the same process for every other classified object in the scene. That might sound tedious, but the whole thing happened faster than you might think — Catanzaro said the car simulation ran at 25 frames-per-second and that the AI rendered everything in real time.

NVIDIA’s team also used this new video-to-video synthesis technique to digitally coax a team member into dancing like PSY. Crafting this model took the same kind of work as the car simulation, only this time the AI was tasked with figuring out the dancer’s poses, turning them into rudimentary stick figures and rendering another person’s appearance on top of them.

For now, the company’s results speak for themselves. They’re not as graphically rich or as detailed as a typical scene rendered in a AAA video game, but NVIDIA’s sample videos offer glimpses at digital cities filled with objects that do sort of look real. Emphasis on “sort of.” The tongue-in-cheek Gangnam Style body swaps worked a little better.

While NVIDIA has open-sourced all of its underlying code, it’ll likely be a while yet before developers will start using these tools to flesh out their next VR set pieces. That’s just as well, honestly, since the company was quick to point out the neural network’s limitations: while the virtual cars zipping around its simulated cityscape looked surprisingly true-to-life, NVIDIA said its model wasn’t great at rendering vehicles as they turn because its label maps lacked sufficient information. More troubling to VR artisans is the fact that certain objects, like those pesky cars, might not always look the same as the scene progresses; in particular, NVIDIA said those objects may change color slightly over time. These all are clearly representations of real-world objects, but they’re a long way from being photo-realistic for long periods of time.

Those technical shortcomings are one thing; it’s sadly not hard to see how these techniques could be used for unsavory purposes, too. Just look at deepfakes: it’s getting remarkably difficult to tell these artificially generated videos apart from the real thing, and as NVIDIA proved with its Gangnam Style test, its neural model could be used to create uncomfortable situations for real people. Perhaps unsurprisingly, Catanzaro largely looks on the bright side.

“People really enjoy virtual experiences,” he told Engadget. “Most of the time they’re used for good things. We’re focused on the good applications.” Later, though, he conceded people using tools like these for things he doesn’t approve of is the “nature of technology” and pointed out that “Stalin was Photoshopping people out of pictures in the ’50s before Photoshop even existed.”

There’s no denying that NVIDIA’s research is a notable step forward in digital imaging, and in time it may help change the way we create and interact with virtual worlds. For commerce, for art, for innovation and more, that’s a good thing. Even so, the existence of these tools also means the line between real events and fabricated ones will continue to grow more tenuous, and pretty soon we’ll have to start really reckoning with what these tools are capable of.

from Engadget https://engt.co/2zyHsQ7

via IFTTT