The Kinect will never die.

Microsoft debuted its motion-sensing camera on June 1st, 2009, showing off a handful of gimmicky applications for the Xbox 360; it promised easy, controller-free gaming for the whole family. Back then, Kinect was called Project Natal, and Microsoft envisioned a future where its blocky camera would expand the gaming landscape, bringing everyday communication and entertainment applications to the Xbox 360, such as video calling, shopping and binge-watching.

This was the first indication that Microsoft’s plans for Kinect stretched far beyond the video game industry. With Kinect, Microsoft popularized the idea of yelling at our appliances, a habit that now underpins the smart-home and IoT market. Amazon Echo, Google Home, Apple’s Siri and Microsoft’s Cortana (especially that last one) are all derivative of the core Kinect promise that when you talk to your house, it should respond.

Kinect for Xbox 360 landed in homes in 2010 — four years before the first Echo — and by 2011 developers were playing around with a version of the device specifically tailored for Windows PCs. Kinect for Windows hit the market in 2012, followed by an Xbox One version in 2013 and an updated Windows edition in 2014.

None of these devices disrupted the video game or PC market on a massive scale. Even as artists, musicians, researchers and developers found innovative uses for its underlying technology, Kinect remained an unnecessary accessory for many video game fans. Support slowed and finally disappeared completely in October 2017, when Microsoft announced it would cease production of the Kinect. By then, Microsoft had sold 35 million units over the device’s lifetime.

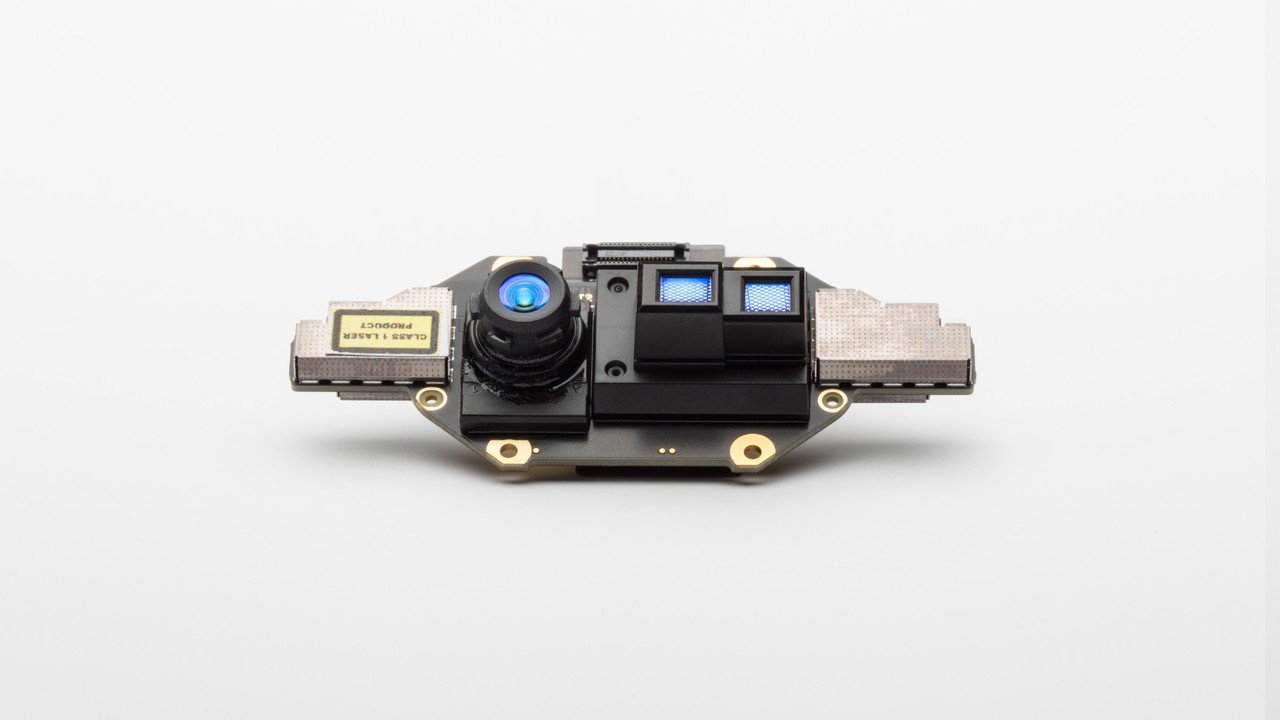

However, the Kinect lives on today in some of Microsoft’s most forward-looking products, including drones and artificial intelligence applications. Kinect sensors are a crucial component in HoloLens, the company’s augmented reality glasses, for example. And just today, Microsoft revealed Project Kinect for Azure, a tiny device with an advanced depth sensor, 360-degree mic array and accelerometer, all designed to help developers overlay AI systems on the real world.

“Our vision when we created the original Kinect for Xbox 360 was to produce a device capable of recognizing and understanding people so that computers could learn to operate on human terms,” said Alex Kipman, Technical Fellow for AI Perception and Mixed Reality at Microsoft. “Creative developers realized that the technology in Kinect (including the depth-sensing camera) could be used for things far beyond gaming.”

While Kipman’s version of events makes it sound like the Kinect’s evolution as an AI tool was happenstance, Microsoft has long recognized video games’ impact on broader industries, and it’s not afraid to use the Xbox platform as a proving ground for new technologies. Just nine days after the first public demonstration of Project Natal in 2009, Microsoft published a presentation called Video Games and Artificial Intelligence, which dives into the myriad ways video games can be used as AI testbeds.

“Let us begin with a provocative question: In which area of human life is artificial intelligence (AI) currently applied the most? The answer, by a large margin, is Computer Games,” the presentation’s synopsis said. “This is essentially the only big area in which people deal with behavior generated by AI on a regular basis. And the market for video games is growing, with sales in 2007 of $17.94 billion marking a 43 percent increase over 2006.”

Today, the video game market is worth more than $100 billion — a figure that continues to climb year-over-year. Not only is Microsoft putting the ghost of Kinect to work in its newest AI and AR systems, but it’s planning to test the limits of its machine learning initiative within the gaming realm. During the Game Developers Conference this year, Microsoft touted some practical applications of its new Windows Machine Learning API — namely, it wants developers to use dynamic neural networks to create personalized experiences for players, tailoring battles, loot and pacing to individual play styles. Of course, Microsoft will be collecting all of this data along the way, learning from players, developers and games themselves.

Video games are the perfect proving ground for AI systems, as the industry continues to pioneer new technologies. Just take a look at virtual reality, a field that found its momentum in video games and has since exploded onto the mainstream stage. Even Microsoft’s digital assistant, Cortana, is named after a character in Halo, one of the company’s most beloved gaming franchises. Kinect was doing visual overlay and responding to audio commands years before Snapchat’s lenses or the Echo came out, and now Microsoft is building those systems into HoloLens, the most prominent consumer-facing AR headset on the market.

“With HoloLens we have a device that understands people and environments, takes input in the form of gaze, gestures and voice, and provides output in the form of 3D holograms and immersive spatial sound,” Kipman wrote. “With Project Kinect for Azure, the fourth generation of Kinect now integrates with our intelligent cloud and intelligent edge platform, extending that same innovation opportunity to our developer community.”

At Microsoft, there’s a clear highway from game development to everyday, mainstream applications, and traffic runs both ways. As video games feed the company’s AI and AR applications, serving as testing grounds for new technologies, advances in AI feed the game-development process, allowing creators to build smarter, larger, more personalized and more beautiful titles. But much of this technology doesn’t stop at games. More often than not, video games are just the beginning.

Click here to catch up on the latest news from Microsoft Build 2018!

from Engadget https://engt.co/2I5osef
